Interspeech 2023 UnDiff: Unsupervised Voice Restoration with Unconditional Diffusion Model

This paper introduces UnDiff, a diffusion probabilistic model capable of solving various speech inverse tasks. Once trained for unconditional speech waveform generation, it can be adapted to different tasks, including degradation inversion, neural vocoding, and source separation. We first tackle the challenging problem of unconditional waveform generation by comparing different neural architectures and preconditioning domains. We then demonstrate how the trained unconditional diffusion model can be adapted to different speech processing tasks by means of recent developments in post-training conditioning of diffusion models. Finally, we evaluate the proposed technique on bandwidth extension, declipping, vocoding, and speech source separation, and compare it to the baselines. The code is publicly available.

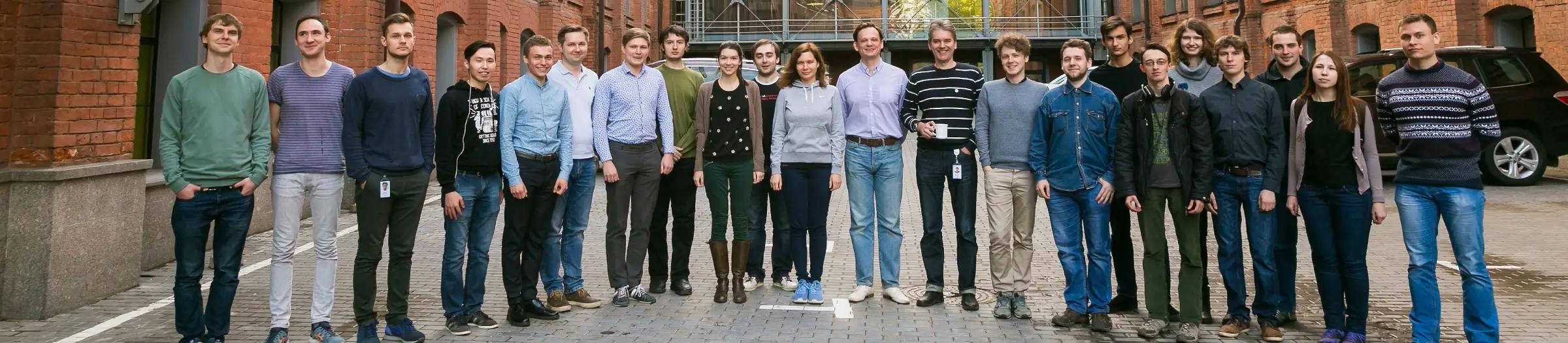

Anastasiia Iashchenko

Pavel Andreev

Dmitry Vetrov
